Ethical AI Boundaries in Healthcare

What AI Should and Should Not Be Used For

Lavette Minn

1/5/2026 · 2 min read

Artificial intelligence is becoming deeply embedded in healthcare operations, marketing, and communication. While AI offers efficiency and insight, it also raises important ethical questions, especially in environments where patient trust and safety are paramount.

Understanding ethical AI boundaries is not optional. It is essential.

Why Ethical Boundaries Matter in Healthcare AI

Healthcare differs from other industries because decisions directly affect human well-being. AI tools used without clear boundaries can unintentionally cause harm, create confusion, or introduce risk.

Ethical AI boundaries ensure that technology supports healthcare professionals rather than replacing judgment, care, or accountability.

Without these boundaries, organizations may face:

Erosion of patient trust

Ethical conflicts

Compliance exposure

Reputational damage

Overreliance on automation

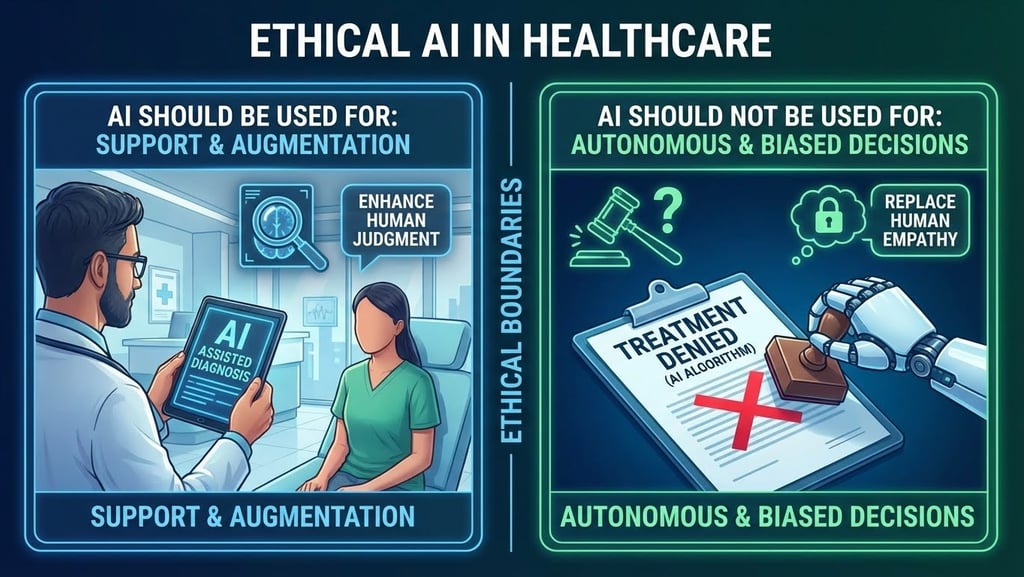

What Ethical AI Use Looks Like in Healthcare

Ethical AI use is intentional, limited, and supervised. It prioritizes patient dignity, data protection, and professional responsibility.

Appropriate AI use in healthcare may include:

Administrative workflow support

Operational efficiency and analysis

Documentation assistance

Educational content development

Internal process optimization

In these areas, AI functions as a support tool, not a decision-maker.

What AI Should Not Be Used For in Healthcare

Clear boundaries protect both patients and organizations.

AI should not be used to:

Diagnose medical or mental health conditions

Replace clinical judgment or treatment planning

Provide medical or therapeutic advice

Make autonomous patient care decisions

Interpret protected health information without safeguards

These responsibilities require human expertise, accountability, and ethical oversight.

The Risk of Over-Automation in Healthcare Communication

Automation can improve efficiency, but excessive automation can feel impersonal or unsafe in healthcare contexts.

Risks of over-automation include:

Loss of empathy in patient communication

Inaccurate or inappropriate responses

Reduced trust in digital interactions

Ethical discomfort for patients

Ethical AI strategy balances automation with human review and discretion.

How Compliance and Ethics Intersect in AI Strategy

Ethical AI boundaries work hand in hand with compliance-aware planning.

A responsible approach considers:

Data privacy and protection

Transparency in communication

Clear usage policies for staff

Documentation of AI-supported workflows

Ongoing monitoring and review

This intersection protects organizations while supporting innovation.

The Role of Leadership in Ethical AI Adoption

Ethical AI adoption begins with leadership.

Healthcare leaders must:

Define acceptable AI use cases

Establish clear internal guidelines

Educate teams on limitations and risks

Encourage critical thinking over blind reliance on automation

Maintain accountability for outcomes

Without leadership oversight, AI adoption becomes reactive rather than strategic.

Why Ethical AI Boundaries Support Healthcare Branding

Brand trust is built on responsibility and transparency.

When healthcare organizations communicate clear ethical boundaries:

Patients feel safer and more respected

Messaging remains honest and accurate

Digital experiences feel intentional

Brand credibility is strengthened

Ethical AI use reinforces a brand’s commitment to care, not convenience.

Who Should Prioritize Ethical AI Strategy

Ethical AI strategy is especially important for:

Mental health providers

Medical and specialty practices

Wellness and concierge healthcare brands

Public health organizations

Healthcare startups building credibility

These organizations benefit from defining clear boundaries before implementation.

Moving Forward with Responsibility

AI will continue to evolve, but ethical responsibility remains constant.

Healthcare organizations that define boundaries early are better equipped to adapt, innovate, and scale without compromising patient trust or professional integrity.

Ethical AI use is not about limitation. It is about leadership.

Final Thoughts

AI is a powerful tool, but it must be guided by ethics, education, and strategy. Clear boundaries ensure that AI supports healthcare rather than reshaping it in unintended ways.

Responsible use protects patients, teams, and brands alike.

Start with Strategic Assessment

Every engagement with Blue Diamond Brandz™ begins with Digging for Diamondz, a complimentary 20-minute consultation designed to assess AI boundaries, compliance considerations, and strategic opportunities.

Book your Digging for Diamondz consultation to get started.

LET'S CONNECT

Building brands with strategy and healthcare expertise.


info@lavetteminn.com

800-687-7812 ext 1000

© 2026. All rights reserved. Designed by Blue Diamond Brandz